Artificial intelligence (AI) has quickly become one of the most promising new tools in healthcare. Not only has it been used to improve individual health outcomes by streamlining diagnosis and treatment, but recent studies have begun to explore its ability to improve public health outcomes as well.

Current research seeks to understand the potential of AI models to reduce health inequity by addressing social determinants of health (SDOH). These models use several methods to identify, aggregate, and export SDOH data, such as housing or employment status, from thousands of doctors’ notes and other unstructured data in health records.

By leveraging AI, healthcare organizations can address the non-medical factors that influence health outcomes. For example, prediction models combining claims data with SDOH have been shown to improve risk stratification and inform targeted interventions for at-risk populations.

What are health inequities?

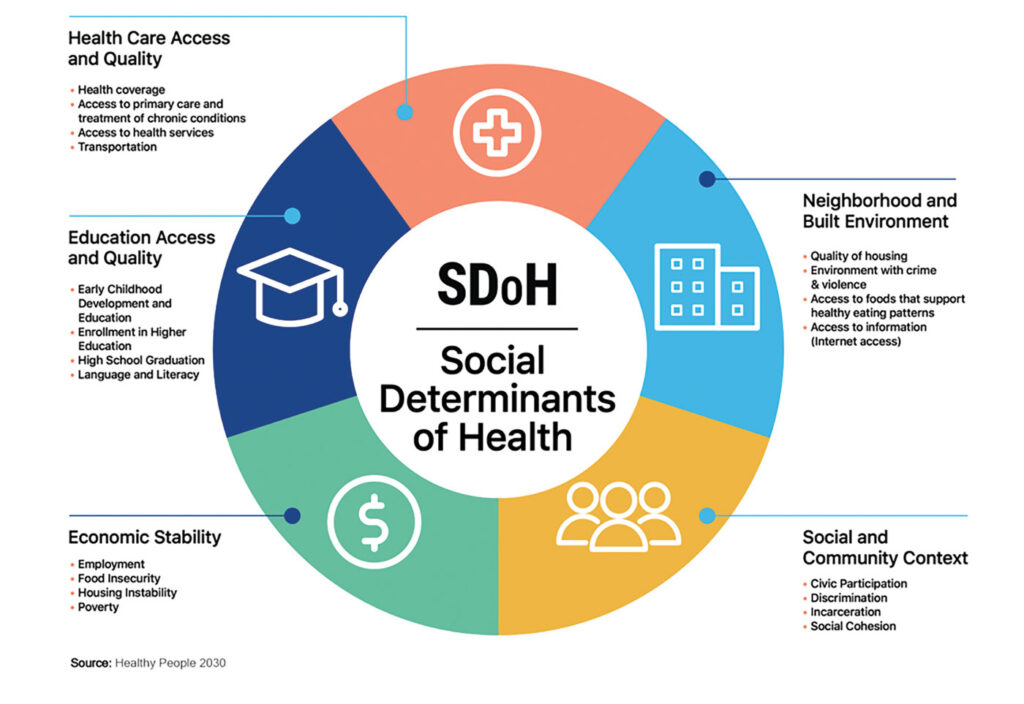

It is well known that some demographics are more at risk for health issues than others. These differences are referred to as health disparities. When disparities can be attributed to systemic social conditions such as poverty and racism, however, they are considered inequities. These socially driven differences in health outcomes can be mapped onto a variety of SDOH, which influence how people live, work, and age from birth onward.

SDOH are generally grouped into five categories. The Centers for Disease Control and Prevention labels them as economic stability, education access and quality, health care access and quality, neighborhood and built environment, and social and community context. These forces affect economic policies, environmental policies, and political systems and thus affect whole populations. Research also shows that SDOH can have greater impacts on individual health than some lifestyle factors. Addressing these factors is fundamental to improving public health outcomes and reducing longstanding inequities in health.

Why is it important to screen for SDOH?

Screening for SDOH allows healthcare providers and institutions to understand and address the “hidden” factors contributing to the health of their patients. This information allows providers to tailor care to the specific needs of individuals and communities. It also helps connect patients with appropriate social services and community resources to address their unmet social needs. Some institutions have successfully used these data to pioneer community social support programs, including rideshare programs that transport patients to their appointments and the placement of free HEPA filters in schools in heavily polluted areas.

How does AI help?

AI models are mathematical frameworks or algorithms that enable computers to perform complex tasks at a level that rivals human intelligence. These models are designed to process data continuously, which allows them to learn and eventually make decisions based on that information.

A recent study published in Nature used large language models and varied machine learning techniques to locate and organize data about SDOH from text-based doctors’ notes. Fine-tuned ChatGPT-family and Flan-T5 XL models were trained to identify six SDOH indicators, including employment and housing status, transportation access, and social support systems. At peak performance, the models were able to identify and flag almost 94% of SDOH indicators in patient records, compared with only 2% captured by physician International Classification of Diseases (ICD) codes.
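To make the task concrete, here is a minimal Python sketch of flagging SDOH indicators in a free-text note and measuring what share of the true indicators were caught. It is not the study's method: the study used fine-tuned language models, while this stand-in uses simple keyword matching. The category names, patterns, and sample note are all hypothetical and chosen only for illustration.

```python
from __future__ import annotations

import re

# Hypothetical keyword patterns for four SDOH categories (illustrative only;
# the study described above used language models, not keyword rules).
SDOH_PATTERNS = {
    "employment": re.compile(r"\b(unemployed|laid off|out of work)\b", re.I),
    "housing": re.compile(r"\b(homeless|evicted|staying in a shelter)\b", re.I),
    "transportation": re.compile(r"\b(no car|no ride|lacks transportation)\b", re.I),
    "social_support": re.compile(r"\b(lives alone|socially isolated)\b", re.I),
}


def flag_sdoh(note: str) -> set[str]:
    """Return the SDOH categories whose patterns appear in the note."""
    return {name for name, pattern in SDOH_PATTERNS.items() if pattern.search(note)}


def recall(predicted: set[str], actual: set[str]) -> float:
    """Share of the true indicators that were flagged."""
    if not actual:
        return 1.0  # nothing to find, so nothing was missed
    return len(predicted & actual) / len(actual)


# A made-up note, not real patient data.
note = "Patient was recently laid off and lives alone. Has no ride to clinic."
flags = flag_sdoh(note)
print(sorted(flags))
```

Keyword rules like these miss paraphrases and negations ("denies being homeless"), which is one reason researchers turn to language models for this task.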

Other studies have used AI to develop risk stratification models. These models were trained to pull data from several sources like SDOH factors in doctors’ notes, insurance claims data, and intake and discharge forms to determine which patients were at the highest risk for future hospitalizations. This early identification can help streamline the delivery of care management resources to patients who can benefit the most.
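The idea behind risk stratification can be sketched in a few lines of Python. The example below assumes a simple logistic score over three inputs, one from claims data and two SDOH flags. The field names and weights are invented for illustration. A real model would learn its weights from historical data and would be validated before use.

```python
import math
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    prior_admissions: int        # e.g. drawn from claims data
    housing_instability: bool    # e.g. flagged in a doctor's note
    lacks_transportation: bool   # e.g. reported on an intake form


# Made-up weights for illustration only; not taken from any study.
WEIGHTS = {
    "intercept": -3.0,
    "prior_admissions": 0.6,
    "housing_instability": 0.9,
    "lacks_transportation": 0.5,
}


def risk_score(p: Patient) -> float:
    """Logistic score between 0 and 1; higher means higher estimated risk."""
    z = (
        WEIGHTS["intercept"]
        + WEIGHTS["prior_admissions"] * p.prior_admissions
        + WEIGHTS["housing_instability"] * int(p.housing_instability)
        + WEIGHTS["lacks_transportation"] * int(p.lacks_transportation)
    )
    return 1.0 / (1.0 + math.exp(-z))


def top_risk(patients: list, k: int) -> list:
    """Return the k patients with the highest scores, highest first."""
    return sorted(patients, key=risk_score, reverse=True)[:k]


# Made-up patients, not real data.
patients = [
    Patient("A", prior_admissions=0, housing_instability=False, lacks_transportation=False),
    Patient("B", prior_admissions=3, housing_instability=True, lacks_transportation=True),
    Patient("C", prior_admissions=1, housing_instability=False, lacks_transportation=True),
]
for p in top_risk(patients, k=2):
    print(p.patient_id, round(risk_score(p), 3))
```

Ranking patients this way lets a care team direct limited care management resources to the top of the list first.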

Are there any risks?

As with all technology, AI is not without dangers and shortcomings, especially when applied in medical settings. To reach their full potential, AI models must overcome human biases, regional population differences, and access barriers in low-resource settings.

Machine learning builds upon layers of algorithms that must first be primed by humans. This also means that these models are susceptible to human biases. These biases arise from both the design of the algorithm itself and the data used to train it, and they can result in unintended consequences. Chief among these are privacy violations and the reinforcement of social prejudices like racism and classism, both of which are related to social determinants of health. Ongoing research should focus on ensuring that these processes comply with established ethical procedures to prevent the perpetuation of the very biases the technology is created to reduce. One example of such a policy is requiring explicit patient consent, through an opt-in or opt-out choice, before their data is mined.

One of the principal goals of AI models is to close inequity gaps in low-income populations both in the United States and globally. Unfortunately, the populations that stand to benefit the most from this technology may not have access to it. Many of the hospitals that serve low-income patients are underfunded and do not have the monetary resources or technical infrastructure to implement and maintain the necessary algorithms. In low- and middle-income countries, there is the added barrier of making this technology portable so it may be readily used in the field. Finding ways to lower costs while maintaining the complexity and capacity of more expensive models is essential to ensure that the benefits are available regardless of location and socioeconomic status.

Differences in region, age, gender, and medical history also require nuance that AI does not always possess. For these models to be successful, they must be widely applicable and readily fine-tuned to serve various populations.

When these models are not made adaptable, they are prone to data shift, a mismatch between the data used to train the model and the data the model is tasked with analyzing. For example, a model that is trained to diagnose heart problems using clinical data from white, male patients is likely to have a lower rate of success when applied to non-white or female patients. To address the risk of data shift, developers must be committed to training their models with diverse data. They must take special care to account for variables like race and urban versus rural settings, and for the needs of geriatric and pediatric populations.
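One simple way to spot this kind of mismatch is to compare the makeup of the training population with that of the population the model will serve. The Python sketch below does this with total variation distance, a standard measure of how far apart two distributions are (0 means identical mix, 1 means no overlap). It covers only a shift in subgroup mix, not every form of data shift, and the group labels and counts are made up.

```python
from collections import Counter


def proportions(labels: list) -> dict:
    """Share of records that falls in each subgroup."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def total_variation_distance(train_labels: list, deploy_labels: list) -> float:
    """Half the sum of absolute differences in subgroup shares (0 to 1)."""
    p = proportions(train_labels)
    q = proportions(deploy_labels)
    groups = set(p) | set(q)
    return 0.5 * sum(abs(p.get(g, 0.0) - q.get(g, 0.0)) for g in groups)


# Made-up example: a model trained mostly on urban patients,
# then deployed where most patients are rural.
train = ["urban"] * 90 + ["rural"] * 10
deploy = ["urban"] * 40 + ["rural"] * 60
print(round(total_variation_distance(train, deploy), 2))
```

A large value does not prove the model will fail, but it is a signal that performance should be rechecked on the new population before the model is relied on.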

As the functionality of AI continues to evolve, so do the challenges. Many studies have successfully applied AI to analyze text, but studies that have explored applications for imaging have been less successful. A recent study published in Radiology compared the accuracy of an AI program with that of human radiologists when tasked with identifying common lung diseases using X-rays. When detecting airspace disease, the model reported more false positives than the human radiologists, especially when tasked with identifying multiple conditions on the same X-ray. As it stands, some applications of AI are better suited to augmenting human decision-making than to replacing it.

AI has a wide array of clinical applications, from diagnosing disorders to screening for SDOH. This information helps doctors identify patients who are at higher risk for poor health outcomes and connect them to resources. It also aids large medical systems in developing programs and targeted interventions that can impact whole communities. AI, however, carries a risk of inadvertently harming vulnerable patients, such as those from low-income or racial minority populations. For this technology to provide maximal benefit, privacy and demographic and geographic differences must be carefully considered. It is crucial that medical ethics policy and protection evolve in tandem with these new technologies and that these models are continuously evaluated for biases and data shift complications. With the proper training, however, AI could be a key element in eliminating health disparities.

Read more:

- The State of Health Disparities in the United States, January 2017

- Social Determinants of Health

- Case Study: Denver Health Medical Center Collaborates with Lyft to Improve Transportation for Patients, March 2018

- Free Air Purifiers for Early Childhood Education Centers and Schools! April 2023

- Large language models to identify social determinants of health in electronic health records, January 2024

- Improving Risk Stratification Using AI and Social Determinants of Health, November 2022

- Privacy and artificial intelligence: challenges for protecting health information in a new era, September 2021

- Commercially Available Chest Radiograph AI Tools for Detecting Airspace Disease, Pneumothorax, and Pleural Effusion, September 2023

This article was originally published on Inside Precision Medicine and can be read online here.